A docker setup is very helpful for isolating services that are not otherwise packaged. In this post we want to isolate not only their configuration but also their network traffic.

As docker has its own network stack, we can route the traffic from containers. Usually it is difficult to tell a specific process to use only a specific interface. Most of the time a proxy within the Virtual Private Network is used to achieve this. This also has the benefit that, if the network interface goes down and the routing rules are reset, the traffic is not sent through some other default interface.

In this post we take the “proxy idea” to the next level. We will route the traffic of a whole docker container through a specific interface. If the interface goes down, the docker container is not allowed to communicate through any other interface.

Configuration of Docker

First, configure docker in /etc/docker/daemon.json so that it does not get in our way:

    {
      "dns": ["1.1.1.1"]
    }

Depending on your docker setup you may not need this.

Configuration of Docker Network

First we create a new docker network such that we can use proper interface names in our configuration and previously installed containers are not affected.

    docker network create \
      -d bridge \
      -o 'com.docker.network.bridge.name'='vpn' \
      --subnet=172.18.0.0/16 vpn

The network create command creates a new bridge interface on the host with the address 172.18.0.1/16. It is called vpn both within docker and on the Linux host.

You can validate the settings by checking ip a:

    2: vpn: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:d8:16:bd:f4 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.1/16 brd 172.18.255.255 scope global vpn
           valid_lft forever preferred_lft forever
        inet6 fe80::42:d8ff:fe16:bdf4/64 scope link
           valid_lft forever preferred_lft forever

The docker host gets the IP 172.18.0.1.

Setting up a Docker Container

Next we create a docker container within the created subnet.

    docker pull ubuntu
    docker create \
      --name=network_jail \
      --network vpn \
      --ip 172.18.0.2 \
      -t -i \
      ubuntu

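Now let's start the container and attach to its shell:

    # On the host: start the container and attach to it
    docker start -i network_jail
    # Inside the container: install the tools and show the interfaces
    apt update && apt install curl iproute2
    ip a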

and look at the configuration:

    67: eth0@if68: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 172.18.0.2/16 brd 172.18.255.255 scope global eth0
           valid_lft forever preferred_lft forever

We can also test the connection to the internet with curl -4 ifconfig.co.

Routing a Docker Container through an OpenVPN Interface

The next step is to set up the routes that send traffic from 172.18.0.0/16 through a VPN. We use OpenVPN here as it is widely used. OpenVPN offers a way to set up routes with an --up and a --down script. First we tell OpenVPN not to mess with the routing in any way with pull-filter ignore redirect-gateway. Here is a sample OpenVPN config to use with this setup:

    client
    dev tun0
    proto udp
    remote example.com 1194

    auth-user-pass /etc/openvpn/client/auth
    auth-retry nointeract

    pull-filter ignore redirect-gateway
    script-security 3
    up /etc/openvpn/client/vpn-up.sh
    down /etc/openvpn/client/vpn-down.sh

The vpn-up.sh script has several parameters.

| Parameter | Description | Example |
|---|---|---|
| docker_net | The vpn docker subnet | 172.18.0.0/16 |
| local_net | Some local network you want to route over eth0 | 192.168.178.0/24 |
| local_gateway | The gateway of the local network | 192.168.178.1 |
| trusted_ip | Set by OpenVPN to the IP of the OpenVPN endpoint | 11.11.11.11 |
| dev | Set by OpenVPN to the OpenVPN interface | tun0 |

Note that eth0 is used here as the interface over which OpenVPN makes its connection. Furthermore, in my setup a private LAN is behind eth0.

     1  #!/bin/sh
     2  docker_net=172.18.0.0/16
     3  local_net=192.168.178.0/24
     4  local_gateway=192.168.178.1
     5
     6  # Checks to see if there is an IP routing table named 'vpn', create if missing
     7  if ! grep -q vpn /etc/iproute2/rt_tables; then
     8      echo "100 vpn" >> /etc/iproute2/rt_tables
     9  fi
    10
    11  # Remove any previous routes in the 'vpn' routing table
    12  /bin/ip rule | /bin/sed -n 's/.*\(from[ \t]*[0-9\.\/]*\).*vpn/\1/p' | while read RULE
    13  do
    14      /bin/ip rule del ${RULE}
    15      /bin/ip route flush table vpn
    16  done
    17
    18  # Add route to the VPN endpoint (trusted_ip is set by OpenVPN)
    19  /bin/ip route add $trusted_ip via $local_gateway dev eth0
    20
    21  # Traffic coming FROM the docker network should go through the vpn table
    22  /bin/ip rule add from ${docker_net} lookup vpn
    23
    24  # Uncomment this if you want to have a default route for the VPN
    25  # /bin/ip route add default dev ${dev} table vpn
    26
    27  # Needed for OpenVPN to work
    28  /bin/ip route add 0.0.0.0/1 dev ${dev} table vpn
    29  /bin/ip route add 128.0.0.0/1 dev ${dev} table vpn
    30
    31  # Local traffic should go through eth0
    32  /bin/ip route add $local_net dev eth0 table vpn
    33
    34  # Traffic to docker network should go to docker vpn network
    35  /bin/ip route add $docker_net dev vpn table vpn
    36
    37  exit 0

Credits go to 0xacab

Here is the explanation for the rules:

| Lines | Explanation |
|---|---|
| 8 | Creates a table for packets coming from the docker vpn network |
| 14-15 | Removes the rules left over from a previous run and flushes the vpn table, so that the rules below start from a clean state |
| 18-19 | Route packets to the OpenVPN endpoint over eth0 |
| 21-22 | Route packets coming from the docker vpn network to the vpn table |
| 27-29 | This is a trick by OpenVPN to get highest priority (see Further notes) |
| 34-35 | Route packets going to the docker network to the docker network |

By leaving line 25 commented out, we only route traffic from the docker vpn network over OpenVPN.
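Once OpenVPN has run vpn-up.sh, the policy rule from lines 21-22 should show up in the output of ip rule show. The following is a minimal sketch of such a check. The helper name has_rule is hypothetical, and it assumes the usual output format of ip rule show, for example "32765: from 172.18.0.0/16 lookup vpn".

```shell
#!/bin/sh
# Sketch only: has_rule is a hypothetical helper, not part of the original setup.
# It reads `ip rule show` output on stdin and succeeds if a rule of the form
# "from <net> lookup <table>" is present.
has_rule() {
    awk -v net="$1" -v table="$2" '
        {
            for (i = 1; i <= NF - 3; i++)
                if ($i == "from" && $(i + 1) == net &&
                    $(i + 2) == "lookup" && $(i + 3) == table)
                    found = 1
        }
        END { exit (found ? 0 : 1) }'
}

# Demo with a sample line in the assumed `ip rule show` format.
printf '32765:\tfrom 172.18.0.0/16 lookup vpn\n' \
    | has_rule 172.18.0.0/16 vpn && echo "rule present"
```

On the host this would be used as ip rule show | has_rule 172.18.0.0/16 vpn. The routes themselves can be inspected with ip route show table vpn.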

The vpn-down.sh script removes the route to $trusted_ip which was added during setup.

    #!/bin/bash

    local_gateway=192.168.178.1

    /bin/ip route del $trusted_ip via $local_gateway dev eth0

Setup IPtables to Reject Packets which Fallback to Another Interface

Finally, we want to prevent packets from going out over the eth0 interface if OpenVPN on tun0 is down.

    #!/bin/bash
    local_network=192.168.178.0/24

    iptables -I DOCKER-USER -i vpn ! -o tun0 -j REJECT --reject-with icmp-port-unreachable
    iptables -I DOCKER-USER -i vpn -o vpn -j ACCEPT
    iptables -I DOCKER-USER -i vpn -d $local_network -j ACCEPT
    iptables -I DOCKER-USER -s $local_network -o vpn -j ACCEPT
    iptables -I DOCKER-USER -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

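Basically what this script says is that traffic coming from vpn which is not routed out through tun0 is rejected. Traffic between vpn and vpn is allowed. Traffic to and from the local network is also allowed. The last line is needed so that existing connections are accepted. Because every rule is added with -I, which inserts at the top of the chain, the rules are evaluated in the reverse of the order in which they are written, so the REJECT rule is checked last.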
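Each rule in the script is added with -I and no rule number, so it is inserted at position 1 of DOCKER-USER and the chain ends up in reverse order. The following sketch only prints the rule specifications in the order in which iptables would evaluate them. It does not touch iptables, and the helper name effective_order is hypothetical.

```shell
#!/bin/sh
# Sketch only: effective_order is a hypothetical helper for illustration.
# It reads one rule per line (in script order) and prints them reversed,
# which is the order they end up in when each one is inserted at the top.
effective_order() {
    awk '{ lines[NR] = $0 } END { for (i = NR; i >= 1; i--) print lines[i] }'
}

# Rule specifications in the order they appear in the script.
rules='-i vpn ! -o tun0 -j REJECT --reject-with icmp-port-unreachable
-i vpn -o vpn -j ACCEPT
-i vpn -d 192.168.178.0/24 -j ACCEPT
-s 192.168.178.0/24 -o vpn -j ACCEPT
-m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT'

printf '%s\n' "$rules" | effective_order
```

The conntrack rule comes out first and the REJECT rule last, so the REJECT only applies to packets that none of the ACCEPT rules matched. The real chain can be compared with iptables -S DOCKER-USER.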

These rules usually live at /etc/iptables/rules.v4.

Running curl -4 ifconfig.co inside the container should now show the IP you have when tunneling your traffic through the VPN. If the OpenVPN process is stopped, the curl should fail or time out.
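This check can be wrapped in a small helper that is run from the host. The following is a sketch under a few assumptions: the container network_jail is running, curl is installed in it, and you know the public IP of your VPN exit. The function name check_egress and the 10 second timeout are hypothetical choices.

```shell
#!/bin/sh
# Sketch only: check_egress is a hypothetical helper.
# Usage: check_egress <expected public IP of the VPN exit>
# Prints "vpn" if the container leaves through the VPN, "blocked" if it has
# no connectivity, and "leak: <ip>" (with exit status 1) for any other IP.
check_egress() {
    expected=$1
    # An empty result means curl failed or timed out inside the container.
    actual=$(docker exec network_jail curl -4 -s --max-time 10 ifconfig.co) || actual=""
    if [ -z "$actual" ]; then
        echo "blocked"
    elif [ "$actual" = "$expected" ]; then
        echo "vpn"
    else
        echo "leak: $actual"
        return 1
    fi
}
```

With the example endpoint from the table above, check_egress 11.11.11.11 should print vpn while OpenVPN is up and blocked after it has been stopped.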

What is DOCKER-USER?

IPtables rules are a bit of a pain with docker. Docker overwrites the iptables configuration when it starts. So if you want to add rules to the FORWARD chain, you have to add them to DOCKER-USER instead so that they are not overwritten. You can read more about this in the manual. Basically we are acting like a router here. An IPtables rule like iptables -I DOCKER-USER -i src_if -o dst_if -j REJECT describes how packets are allowed to flow. We are restricting this to a flow between vpn ↔ tun0.

If you want to have a network configuration which does not change you should set "iptables": false in /etc/docker/daemon.json. That way docker does not touch the IPtables rules. Before doing this I first copied the rules from IPtables when all containers are running. After stopping docker and setting the option to false I started the container again and applied the copied rules manually again.

Why Does This Work?

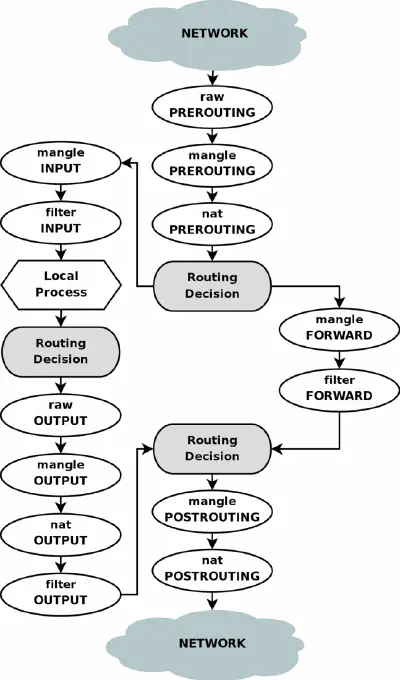

When researching how to do this I sometimes had to look up how routing and filtering actually work on Linux. Some of my attempts were based on marking packets coming from a specific process and then rejecting them if they were not flowing where they should. A further naive idea is to use the IPtables owner module with --uid-owner (iptables -m owner --help). This does not work with docker though, because packets from docker never go through the INPUT, Routing Decision and OUTPUT chain, as seen in the figure above.

The packets from docker only go through PREROUTING, Routing Decision, FORWARD, Routing Decision, POSTROUTING. The best point to filter packets is at the FORWARD/DOCKER-USER chain as we can see from where the packet is coming and where it is going. Filtering by processes only works in the left part of the figure where the concept of Local Processes exists.


Further notes

It’s just a clever hack/trick.

There’s actually TWO important extra routes the VPN adds:

- 0.0.0.0/128.0.0.0 (covers 0.0.0.0 thru 127.255.255.255)
- 128.0.0.0/128.0.0.0 (covers 128.0.0.0 thru 255.255.255.255)

The reason this works is because when it comes to routing, a more specific route is always preferred over a more general route. And 0.0.0.0/0.0.0.0 (the default gateway) is as general as it gets. But if we insert the above two routes, the fact they are more specific means one of them will always be chosen before 0.0.0.0/0.0.0.0 since those two routes still cover the entire IP spectrum (0.0.0.0 thru 255.255.255.255).

VPNs do this to avoid messing w/ existing routes. They don’t need to delete anything that was already there, or even examine the routing table. They just add their own routes when the VPN comes up, and remove them when the VPN is shutdown. Simple.

Source: http://www.dd-wrt.com/phpBB2/viewtopic.php?t=277001
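The "more specific route wins" rule from the note above can be illustrated with a toy longest-prefix match in plain shell. This is only a sketch: the three-entry route table is hard-coded, the default route over eth0 is an assumption for illustration, and the function names are hypothetical. The kernel does this lookup itself.

```shell
#!/bin/sh
# Sketch only: toy longest-prefix match, all helper names are hypothetical.

# Convert a dotted quad to a 32-bit integer (runs in a subshell so that
# the IFS change does not leak into the caller).
ip_to_int() (
    set -f
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# in_prefix ADDR NETWORK PREFIXLEN: succeed if ADDR lies in NETWORK/PREFIXLEN.
in_prefix() {
    p_addr=$(ip_to_int "$1")
    p_net=$(ip_to_int "$2")
    p_len=$3
    # A /0 prefix matches every address.
    if [ "$p_len" -eq 0 ]; then return 0; fi
    p_mask=$(( (0xFFFFFFFF << (32 - p_len)) & 0xFFFFFFFF ))
    [ $(( p_addr & p_mask )) -eq $(( p_net & p_mask )) ]
}

# best_route ADDR: print the matching route with the longest prefix.
best_route() {
    best=""
    best_len=-1
    for route in "0.0.0.0/0 eth0" "0.0.0.0/1 tun0" "128.0.0.0/1 tun0"; do
        cidr=${route% *}
        dev=${route#* }
        net=${cidr%/*}
        len=${cidr#*/}
        if in_prefix "$1" "$net" "$len" && [ "$len" -gt "$best_len" ]; then
            best="$cidr dev $dev"
            best_len=$len
        fi
    done
    echo "$best"
}

best_route 8.8.8.8       # prints: 0.0.0.0/1 dev tun0
best_route 203.0.113.7   # prints: 128.0.0.0/1 dev tun0
```

Every address matches the default route 0.0.0.0/0 and exactly one of the two /1 routes, and the /1 route is chosen because its prefix is longer.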